We’ve all been in meetings where there are plenty of people with great ideas on how to fix or improve things. Maybe you want to improve your website conversion rate, or add a new customer-requested feature, or find a creative way to fix a reported bug.

Most of the time, these suggestions are based on gut instinct or past experience. Starting with a hypothesis and a suggested solution—for example, “Adding the ratings to a category page may increase our conversion rate”—is the right place to start. But the theory is just a theory until you can confirm it with an actual revenue increase. That’s where A/B testing comes into play.

A/B testing allows you to show multiple versions of a webpage to your site visitors to determine which user experience generates the best results. Today, we’re going to show you exactly how the A/B testing process works, using a few tests we recently ran with Savannah Bee Company. For these examples, we used Optimizely.

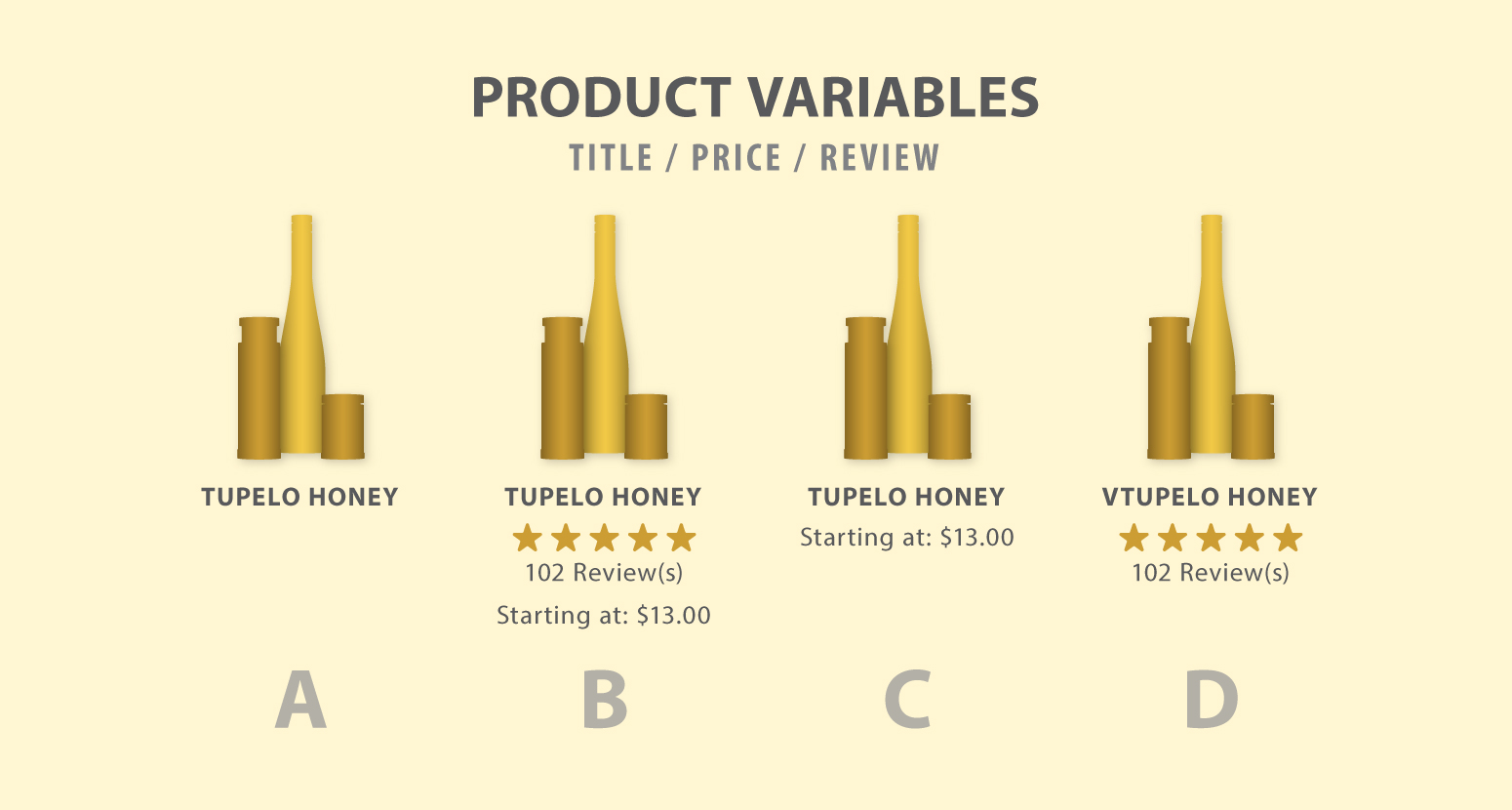

1. Price and Ratings on Category Pages

For this test, our hypothesis was that adding ratings, prices, or both to our product category pages would increase conversion rates. To test it, we ran three variations, plus the control, which showed only the product name.

Version D (ratings only) emerged as the clear winner. Relative to the control, it increased conversion rate by 9.7% and revenue by 37%, while every other variation lowered both. With these results, we could confidently add ratings to our category pages without wondering whether we had left revenue on the table.
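For readers who want to check numbers like these by hand, here is a minimal sketch of how a lift percentage is usually calculated. It assumes the figures are relative changes against the control, which is how testing tools typically report them. The function names and the visitor counts are made up for illustration and are not data from this test.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted (e.g. placed an order)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant against the control (0.10 means +10%)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical counts, chosen only to illustrate the arithmetic.
control = conversion_rate(conversions=200, visitors=10_000)  # 2.0%
variant = conversion_rate(conversions=220, visitors=10_000)  # 2.2%

print(f"Relative lift: {relative_lift(control, variant):.1%}")
```

Note that a relative lift is not the same as a change in percentage points: in this made-up case the conversion rate moves from 2.0% to 2.2%, which is 0.2 percentage points but a 10% relative lift.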

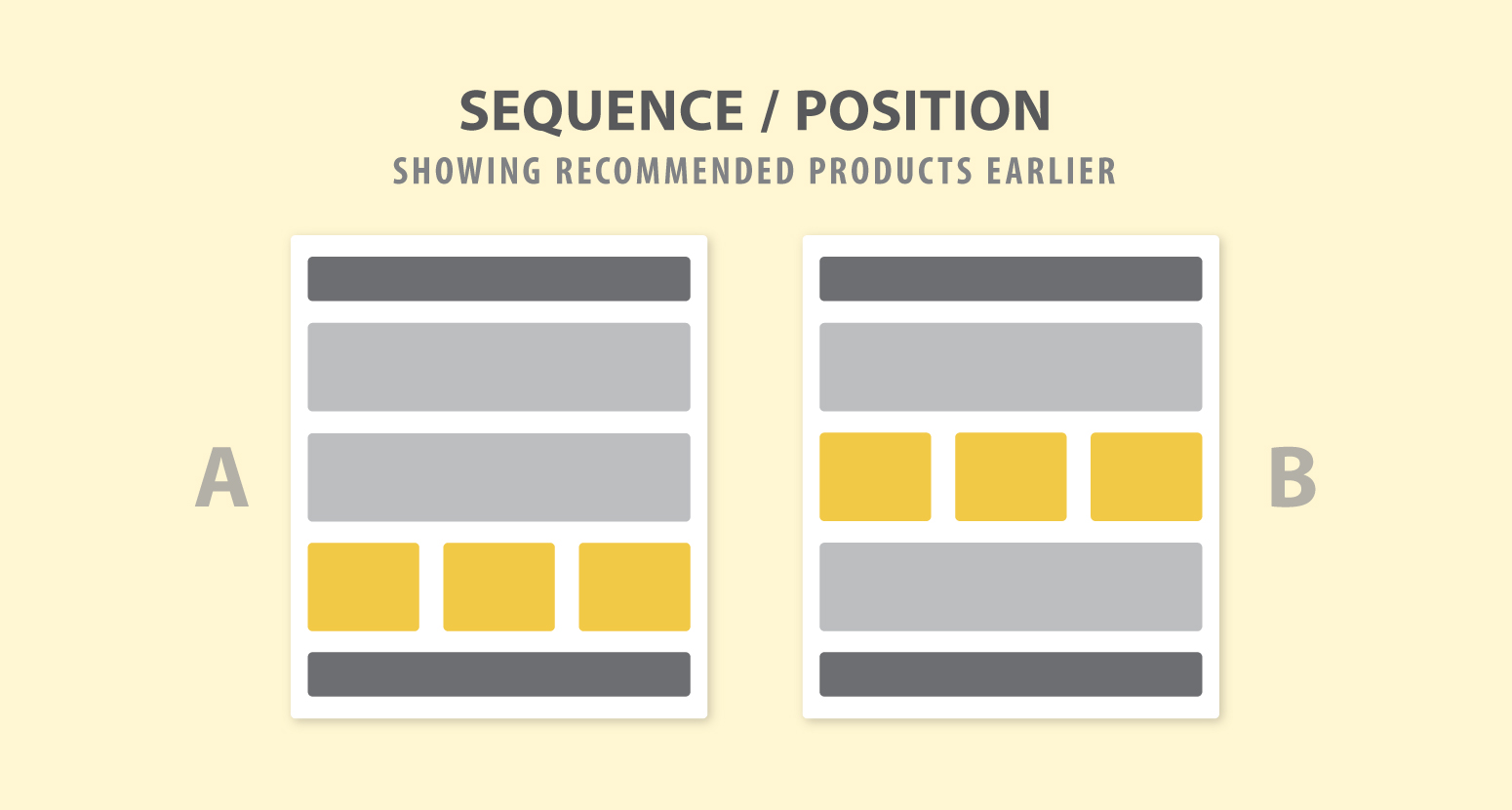

2. Recommended Products Position

On the original design for our product detail pages, the recommended products appeared about three-quarters of the way down the page, below some additional information. We suspected that moving these recommended products to a higher position would increase their visibility and effectiveness. We tested this by running the original layout against a variation (Version B) with the recommended products moved higher on the page.

Version B lifted our conversion rate by almost 4% and increased revenue by 4.4%. We again had a clear winner.
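Smaller lifts like this one are where sample size matters most, so it helps to understand what "a clear winner" means statistically. Testing tools such as Optimizely report significance for you using their own methods. The sketch below is a simplified classical check (a two-proportion z-test), not a reproduction of what any tool does. The function name and all visitor counts are hypothetical and are not data from this test.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rate
    between version A (control) and version B (variation)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 2.00% vs 2.08% conversion, a 4% relative lift.
z, p = two_proportion_z_test(conv_a=500, n_a=25_000, conv_b=520, n_b=25_000)
print(f"z = {z:.2f}, p-value = {p:.3f}")
```

With these invented counts the same 4% relative lift would not be statistically significant, which is why a test needs to run until it has collected enough traffic before a winner is declared.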

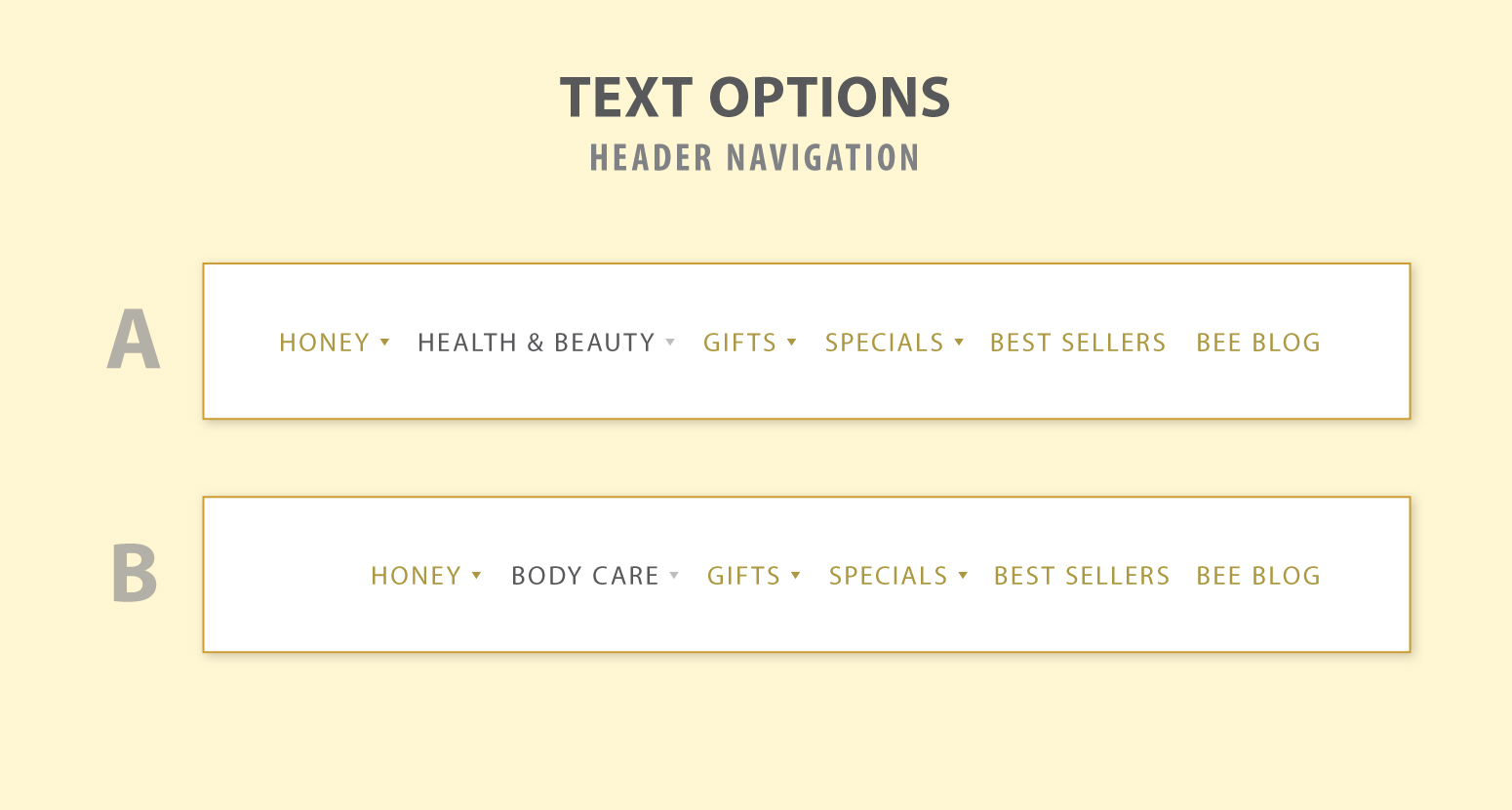

3. Navigation Copy

The last hypothesis was that we could drive more traffic and sales to our Health & Beauty category by renaming it “Body Care” in the main navigation.

We launched the test, showing the new variation to 50% of our traffic, and found that it decreased conversion rate by over 7%. Because our customers didn’t respond to the change the way we anticipated, we kept the original label and avoided that drop in conversion rate.
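If you are curious how a 50% traffic split like this can work under the hood, one common approach is to hash a visitor ID so that each visitor always sees the same version. This is a minimal sketch of that idea, not a description of how Optimizely assigns traffic. The function name, experiment name, and visitor IDs are hypothetical.

```python
import hashlib


def assign_variation(visitor_id: str, experiment: str,
                     variation_share: float = 0.5) -> str:
    """Deterministically place a visitor in 'control' or 'variation'.

    The same visitor and experiment always produce the same answer, so a
    returning visitor keeps seeing the version they saw before.
    """
    if not 0.0 <= variation_share <= 1.0:
        raise ValueError("variation_share must be between 0 and 1")
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "variation" if bucket < variation_share else "control"


# Hypothetical visitor IDs and experiment name.
for visitor in ["visitor-001", "visitor-002", "visitor-003"]:
    print(visitor, assign_variation(visitor, "nav-body-care"))
```

Including the experiment name in the hash means a visitor's assignment in one test does not determine their assignment in another.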

The navigation test clearly shows one of the best reasons to run A/B tests: you can’t always trust your gut instinct, no matter how well you know your customer base. Testing can help you avoid costly mistakes that hurt your user experience.

We hope these examples illustrate how valuable A/B testing can be and inspire you to run some tests of your own. If you’re interested in learning more about A/B testing and conversion rate optimization, don’t hesitate to give us a shout!